Is the Wrong Metric Misleading Your Marketing?

When it comes to measuring the effectiveness of marketing campaigns, what looks like a winner at first glance may actually be a loser upon further examination. Response rate is a metric commonly used for measuring the success of marketing campaigns. The problem is that it's misleading.

It was a pretty typical scenario. The marketing manager at one of our large enterprise clients showed me the results of their latest campaign. More than 20 percent of customers had responded to the new offer. The campaign was deemed a winner. In the marketer's mind it was time to launch the campaign more broadly and start thinking about similar offers.

The scenario was the same as hundreds I'd witnessed before, and for one simple reason: The majority of today's marketers rely on offer acceptance rate to evaluate their campaign effectiveness. The assumption is that the more redemptions, email opens or clicks you get, the more successful your campaign is.

It's not surprising why. Acceptance rates are easy to report on, simple to understand and quick to calculate. At the same time, this is a metric that can be incredibly misleading.

Metrics such as open rates and click rates can provide important insight into brand strength and customer engagement, as well as into aspects of the campaign execution itself, such as the creative or the incentive.

The problem is that they provide no indication of what happened after the customer clicked. They can't tell you about the monetary uplift generated by the campaign. Moreover, they can't tell you about the potential cannibalistic effects of your marketing efforts.

Real-world evidence: positive reactions can have a negative impact on outcomes

Over the years I've found myself in more than one client debate over the topic of response rate, making the case for why offer acceptance rate can be such a misleading marketing metric. What I've lacked in these discussions is any hard evidence.

We recently looked at this issue and what we uncovered was proof that response rate as a marketing metric can be deceptive. Let me walk you through the real-world example.

We examined the marketing of one of our large enterprise B2C clients. Our goal was to explore the correlation between the acceptance rate of offers and the impact of the offers on actual profitability.

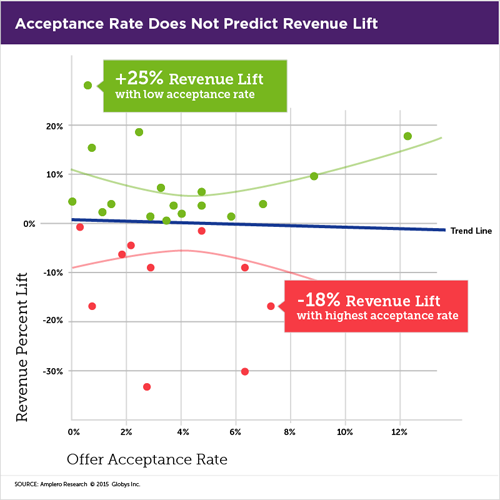

We wanted to determine whether acceptance rates predict revenue lift. More specifically, we wanted to explore whether offers with a low acceptance rate can have a strong positive impact on revenue lift, and whether offers with a high acceptance rate can have a strong negative impact on it, thus getting at the question of how reliable the metric is.

Our client was executing trigger-based marketing campaigns to several million consumer customers via SMS. The campaigns utilized a mix of messages, offers and incentives, and the incentives offered customers a variety of bonus credits based on a range of purchase behaviors.

The testing of all of the different customer experience variants was performed using control groups. For each customer that received an offer, a control was used so that a comparison could determine revenue lift.

The metric we used was total realized revenue in the 14 days following the marketing.
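To make the measurement concrete, here is a minimal sketch of how revenue lift against a control group could be computed. The function name `revenue_lift` and the sample figures are hypothetical illustrations, not the client's data or the exact method used in the study; the sketch assumes lift is defined as the relative difference in mean 14-day revenue per customer.

```python
from statistics import mean


def revenue_lift(treated_revenue, control_revenue):
    """Relative lift of mean treated revenue over mean control revenue.

    Both arguments are lists of per-customer revenue over the same
    measurement window (assumed here to be 14 days).
    """
    control_mean = mean(control_revenue)
    if control_mean == 0:
        raise ValueError("control mean is zero; relative lift is undefined")
    return (mean(treated_revenue) - control_mean) / control_mean


# Hypothetical per-customer revenue, for illustration only.
treated = [12.0, 15.0, 9.0, 14.0]   # customers who received the offer
control = [10.0, 11.0, 9.0, 10.0]   # matched customers who did not

lift = revenue_lift(treated, control)
print(f"Revenue lift: {lift:.1%}")
```

A positive value means the offer group out-earned the control group over the window; a negative value points to cannibalization or some other adverse effect.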

Here's what we found.

1. There was no correlation between acceptance rates and revenue lift. You can see in the graph that the slope of the line is not statistically different from zero. This confirmed that acceptance rates offer no insight into longer-term business KPIs, in this case 14-day trailing revenue. Does your campaign positively or negatively impact revenue over the long term? Acceptance rates can't help you answer this question.

2. Response rate can mislead your marketing. It's entirely possible to have a high acceptance rate and a negative impact on revenue. The opposite can also be true: offers with low acceptance rates can produce strong positive revenue lift. What we showed our client was that the offer with the lowest acceptance rate (<1 percent) had the highest revenue lift (25 percent), while an offer with a 7 percent acceptance rate, which they had deemed a winner, had an 18 percent negative impact on revenue.

3. Incentive type matters. Why? Because the incentive is closely linked to how cannibalizing an offer may be. We all know that marketing can be highly cannibalistic. What we found is that some incentives with high acceptance rates had a very negative impact on revenue lift. Included in the study were two offers that had the same call to action but different bonuses. One generated positive lift while the other generated highly negative lift. The difference was due to a cannibalization effect.

4. Beware of big bonuses. For some of the offers, the client offered bonus credits for future product use. After running the numbers, we learned that the bonuses awarded were routinely too large for a specific call to action. What the study showed is that it doesn't matter how large the bonus is that you give customers to incent behavior, as long as it is within a minimum acceptable range. Of course, this requires being able to test a broad range of incentives and getting a statistically valid measurement of which ones impact performance.
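The slope check behind the first finding can be sketched as an ordinary least-squares fit of revenue lift on acceptance rate, followed by a t-statistic for the slope. This is an illustrative sketch only: the function name `slope_t_statistic` and the per-offer figures are hypothetical, and the study's actual statistical procedure is not described in this article.

```python
import math


def slope_t_statistic(x, y):
    """Fit y = intercept + slope * x by least squares.

    Returns (slope, t_statistic), where the t-statistic tests the
    null hypothesis that the true slope is zero.
    """
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need at least three paired observations")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("x values are all identical; slope is undefined")
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    # Residual sum of squares around the fitted line.
    sse = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))
    std_err = math.sqrt(sse / (n - 2) / sxx)
    if std_err == 0:
        # Perfect fit: the t-statistic is unbounded unless the slope is zero.
        return slope, (math.copysign(math.inf, slope) if slope != 0 else 0.0)
    return slope, slope / std_err


# Hypothetical per-offer figures, for illustration only (in percent).
acceptance_rate = [0.5, 3.0, 7.0, 12.0, 20.0]
revenue_lift_pct = [25.0, -4.0, -18.0, 6.0, 2.0]

slope, t_stat = slope_t_statistic(acceptance_rate, revenue_lift_pct)
print(f"slope={slope:.3f}, t={t_stat:.2f}")
```

As a rough guide, a t-statistic with an absolute value well below 2 suggests the slope cannot be distinguished from zero; a proper test would compare it against the t-distribution with n - 2 degrees of freedom.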

Leveraging machine learning to understand something more meaningful

Like our client, if you've been using offer acceptance rate to measure the effectiveness of your marketing, it's time to ask yourself whether you're getting a read on what you really care about.

What some marketers are realizing is that more advanced testing capabilities combined with machine learning allow you to optimize customer interactions according to long-term KPIs, going well beyond response rate.

More rigorous and automated experimental design allows you to measure lift between matched target and control groups for your KPI of choice, whether you have a revenue, engagement, retention or loyalty objective. Furthermore, it gives you the flexibility to optimize performance against more than one KPI simultaneously. For some customers you may want to trade short-term revenue for a longer-term retention benefit. The key is being able to weigh those tradeoffs and adapt your marketing efforts accordingly.

The real power of controlled experimentation combined with machine learning is that it provides marketers much better insight into the actual outcomes of marketing efforts, resulting in smarter, faster decisions about how to engage each individual.

Today most marketers are convinced they need hundreds of data scientists to construct these types of controlled experiments, test for statistical significance and measure the outcomes. What they don't realize is that machine learning marketing platforms can do this for them.

You don't have to use response rate as a proxy for how your marketing efforts impact revenue or retention. If what you really want to do is understand the outcomes of your marketing and automatically optimize for long-term performance indicators, consider the fact that machine learning can yield measurement that is more meaningful. Also consider that both your marketing and your bottom line will benefit.
