Fight Spammy Links with New Webmaster Tool

While building quality links can be a difficult task, avoiding suspicious or spammy links can be even more of a challenge.

That is why Bing has built a feature that lets Web workers notify the search engine when a suspicious source links to their content. In effect, the new tool lets users tell Bing that they want to distance themselves from a specific site. That isn't the only news from Bing, though: the company has also announced updates to its recently launched SEO tool, SEO Analyzer.

Learn more about both announcements below:

Disavow Links

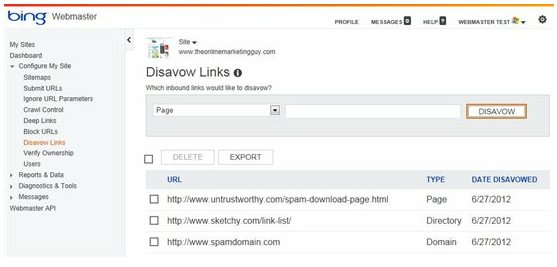

Disavow Links, a new feature in Bing Webmaster Tools, gives Web workers a way to protect their websites from malicious, or merely pesky, links.

With Disavow Links, users can quickly alert Bing to links they don't trust. They can submit page, directory, or domain URLs containing links to their site that seem unnatural or spammy, or that appear to come from low-quality sites.

The feature is located in the "Configure Your Site" section of the navigation. When reporting a suspicious link, users can send the signal to Bing at the page, directory, or domain level. Bing then records the type of location (page, directory, or domain) along with the date it was notified. There is no limit on the number of links users can disavow with the tool.
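To make the three scopes concrete, here is a minimal sketch of how a page, directory, or domain entry might cover the pages linking to a site. Bing has not published its matching rules, so everything here is an assumption for illustration: the `DisavowEntry` record, the `is_disavowed` helper, and the choice to treat "directory" as a path prefix on the same host and "domain" as the host plus its subdomains are all hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable
from urllib.parse import urlsplit


@dataclass(frozen=True)
class DisavowEntry:
    """Hypothetical disavow record: a scope plus the URL or host it covers."""
    scope: str  # "page", "directory" or "domain"
    value: str  # full URL for page/directory, bare host name for domain


def _host(url: str) -> str:
    # urlsplit().hostname is already lowercased; also drop a trailing dot.
    return (urlsplit(url).hostname or "").rstrip(".")


def is_disavowed(source_url: str, entries: Iterable[DisavowEntry]) -> bool:
    """Return True if the linking page falls under any disavow entry (assumed rules)."""
    src = urlsplit(source_url)
    src_host = (src.hostname or "").rstrip(".")
    src_path = src.path or "/"
    for entry in entries:
        if entry.scope == "page":
            # Page level: same host, path and query string (scheme ignored).
            target = urlsplit(entry.value)
            target_key = (_host(entry.value), target.path or "/", target.query)
            if target_key == (src_host, src_path, src.query):
                return True
        elif entry.scope == "directory":
            # Directory level: same host, and the path sits under the directory.
            target = urlsplit(entry.value)
            prefix = target.path if target.path.endswith("/") else target.path + "/"
            if _host(entry.value) == src_host and src_path.startswith(prefix):
                return True
        elif entry.scope == "domain":
            # Domain level: the host itself or any of its subdomains.
            domain = entry.value.lower().rstrip(".")
            if src_host == domain or src_host.endswith("." + domain):
                return True
    return False
```

The point of the sketch is the widening reach of each scope: a page entry covers one URL, a directory entry covers everything beneath a path, and a domain entry covers an entire site, which is why domain-level disavowals deserve the most care.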

According to Bing, users shouldn't expect dramatic ranking changes from using this feature. However, the information they share helps Bing better understand how site owners view the specific sources linking to their content.

SEO Analyzer Updates

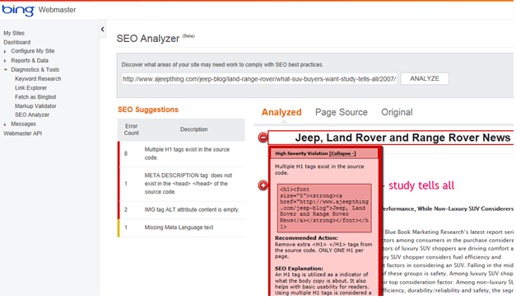

Bing also announced updates to its recently launched SEO Analyzer, a tool that scans URLs and builds reports on how well pages comply with SEO best practices.

According to Bing, when users run SEO Analyzer to fetch a page from their site and scan it in real time for best-practices compliance, the fetch ignores any robots.txt directives they have in place. Bingbot itself still obeys robots.txt as normal, so users who want to test whether their robots.txt rules allow a page to be fetched must use the "Fetch as Bingbot" feature instead.
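For a rough local preview of how a robots.txt file's rules apply to a given user agent, Python's standard `urllib.robotparser` module can parse the file's text directly. This is a minimal sketch, not a substitute for "Fetch as Bingbot": the sample robots.txt is hypothetical, and CPython's parser may differ from Bingbot's own on details such as `*`/`$` wildcards and rule precedence.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt contents used only for illustration.
SAMPLE_ROBOTS_TXT = """\
User-agent: bingbot
Disallow: /private/

User-agent: *
Disallow: /
"""


def is_fetch_allowed(robots_txt: str, url: str, user_agent: str = "bingbot") -> bool:
    """Check a URL against robots.txt rules locally, without any network access."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse() also marks the rules as loaded
    return parser.can_fetch(user_agent, url)


print(is_fetch_allowed(SAMPLE_ROBOTS_TXT, "https://example.com/private/report.html"))  # False
print(is_fetch_allowed(SAMPLE_ROBOTS_TXT, "https://example.com/blog/post.html"))  # True
```

Because SEO Analyzer skips robots.txt entirely, a page it scans successfully can still be blocked for Bingbot; a check like this helps spot that mismatch before confirming it with Bing's own tool.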

SEO Analyzer can also now follow redirects. According to Bing, if users give SEO Analyzer a URL that redirects, the tool follows the redirect and bases its report on the first page it reaches that returns a 200 OK response code. Users can also view each hop in the redirect chain through a Show/Hide link, which displays each URL and its response code.

The tool follows up to six redirects, though hopefully no site needs that many.
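The redirect behavior Bing describes (follow hops until a 200 OK, record each URL and status code, stop after six redirects) can be sketched as a small loop. This is an illustration, not Bing's implementation: the `follow_redirects` function and the injected `fetch` callable are hypothetical, and `fetch` is assumed to return a status code plus the `Location` header for a single request without following redirects itself.

```python
from typing import Callable, List, Optional, Tuple
from urllib.parse import urljoin

# A fetcher makes one request and returns (status_code, location_header_or_None).
Fetcher = Callable[[str], Tuple[int, Optional[str]]]

REDIRECT_CODES = {301, 302, 303, 307, 308}
MAX_REDIRECTS = 6  # the limit Bing states for SEO Analyzer


def follow_redirects(
    url: str, fetch: Fetcher, max_redirects: int = MAX_REDIRECTS
) -> Tuple[Optional[str], List[Tuple[str, int]]]:
    """Follow redirects until a 200 OK; return (final_url or None, list of hops)."""
    hops: List[Tuple[str, int]] = []
    # One initial request plus at most max_redirects follow-up requests.
    for _ in range(max_redirects + 1):
        status, location = fetch(url)
        hops.append((url, status))  # each hop is what a Show/Hide view would list
        if status == 200:
            return url, hops  # the report would be built from this page
        if status in REDIRECT_CODES and location:
            url = urljoin(url, location)  # resolve relative Location headers
            continue
        return None, hops  # an error or other non-redirect status ends the chain
    return None, hops  # gave up: the chain needed more than max_redirects hops
```

Injecting `fetch` keeps the loop testable without a network. With the third-party `requests` library, for example, a fetcher could call `requests.get(url, allow_redirects=False)` and return the response's `status_code` and `headers.get("Location")`.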