The Extent of Keyword Not Provided & Some Practical Solutions

Google dropped a virtual bomb on search engine optimization professionals (all Web businesses really) when it announced in October 2011 that it would begin encrypting search queries. The result was that visits from organic listings no longer included information about individual queries. As you might imagine, not providing referring keyword data sent shockwaves through the industry and the force of that change has since left many struggling to regain their digital balance.

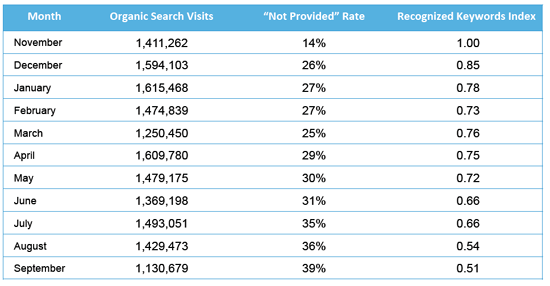

Just how bad is the problem? One of the most highly cited studies of the past few weeks has come from digital marketing software provider Optify. The company examined 400+ business-to-business websites over the past 11 months, analyzing over 17 million visits from organic search and capturing a total of roughly 7.2 million keywords. As you can see in the graph below, the rate of keyword "not provided" now reaches close to 40 percent of all Google searches - an increase of almost 171 percent since encrypted search was introduced.

The impact has been massive. According to the Optify study, 81 percent of the companies analyzed currently see over 30 percent of their traffic from Google as "not provided" and 64 percent see between 30 and 50 percent of their traffic as "not provided". For many 'Net professionals that Website Magazine has spoken with directly, that "not provided" rate is actually higher - in some cases closer to 50 percent.

So, what does this all mean for search engine optimization professionals? What steps can digital-focused businesses take to mitigate this rather substantial data loss? Optify did provide a few suggestions in its report, but as it stands today, the "not provided" problem is expected only to get worse (unless you're an advertiser, of course). Fortunately, SEOs can make do with what they have. While Google isn't sharing all the data, it is still managing to share some (roughly 60 percent) - and for many, a sample of the total data is sufficient (or at least enough to get a general understanding of keyword performance).

The best and most practical option is to simply use Google's Webmaster Tools. As if you needed another reason to use the product/platform, Google graciously gives SEOs access to the top 1,000 daily search queries and top 1,000 daily landing pages for the past 30 days. Since you can export files from GWT, it is possible to compare month-over-month traffic gains/losses on a keyword level. That's a good thing for SEOs, but it's just not enough.
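For readers who want to automate that month-over-month comparison, here is a minimal Python sketch. It assumes each export has been saved as a CSV with `query` and `clicks` columns; those column names and the sample figures are placeholders for illustration, not the actual layout of a Webmaster Tools export, so adjust them to match your own files.

```python
import csv
import io


def load_clicks(csv_text, query_col="query", clicks_col="clicks"):
    """Parse exported CSV text into a {query: clicks} dictionary.

    The column names are assumptions; pass the headers your export uses.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    return {row[query_col]: int(row[clicks_col]) for row in reader}


def month_over_month(previous, current):
    """Return (query, previous clicks, current clicks, change) tuples.

    Queries missing from one month count as zero clicks for that month.
    Rows are sorted by the size of the change, largest first.
    """
    rows = []
    for query in set(previous) | set(current):
        before = previous.get(query, 0)
        after = current.get(query, 0)
        rows.append((query, before, after, after - before))
    rows.sort(key=lambda r: (-abs(r[3]), r[0]))
    return rows


# Made-up sample data standing in for two monthly exports.
march = "query,clicks\nblue widgets,120\nwidget repair,40\n"
april = "query,clicks\nblue widgets,150\nwidget prices,25\n"

changes = month_over_month(load_clicks(march), load_clicks(april))
for row in changes:
    print(row)
```

Treating a missing query as zero clicks is a simplification: a keyword can fall out of the top 1,000 without losing all of its traffic, so read large drops with that in mind.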

The problem with reviewing data in aggregate is that it is impossible to associate referring keywords with website behavior like time on site or page views. Optify suggests using "proxies" such as keyword rank and ranked page to estimate individual keyword performance. Here's how to do that:

1) Sort leads from organic search by entry page

2) Pull keywords that drive traffic (available from Webmaster Tools) to that page

3) Using rank and known CTR for each keyword, estimate traffic to each page by keyword.

4) Using estimated traffic per keyword and known leads per page, estimate the conversion rate per keyword and tie it to other performance data at the keyword level.
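The four steps above can be sketched in Python. This is one possible reading of the approach, not Optify's own method, and it rests on several assumptions: that you have impressions and rank for each keyword on each entry page, that the click-through rates in `ASSUMED_CTR_BY_RANK` (invented values) are a fair stand-in for your "known CTR", and that a page's leads can be split among its keywords in proportion to estimated traffic. All names and numbers here are hypothetical.

```python
from collections import defaultdict

# Hypothetical CTR-by-rank curve; replace with figures you trust.
ASSUMED_CTR_BY_RANK = {1: 0.30, 2: 0.15, 3: 0.10}


def estimate_keyword_performance(pages, ctr_by_rank=ASSUMED_CTR_BY_RANK):
    """Estimate visits, leads and conversion rate per keyword.

    `pages` is a list of dicts, one per entry page (step 1), each holding the
    page's known leads and the keywords that drive traffic to it (step 2).
    """
    totals = defaultdict(lambda: {"visits": 0.0, "leads": 0.0})
    for page in pages:
        # Step 3: estimated visits = impressions * assumed CTR at that rank.
        visits = {
            kw["keyword"]: kw["impressions"] * ctr_by_rank.get(kw["rank"], 0.0)
            for kw in page["keywords"]
        }
        page_visits = sum(visits.values())
        if page_visits == 0:
            continue  # nothing to apportion for this page
        # Step 4: split the page's known leads by traffic share (an assumption).
        for keyword, estimated in visits.items():
            totals[keyword]["visits"] += estimated
            totals[keyword]["leads"] += page["leads"] * estimated / page_visits
    return {
        keyword: {
            **t,
            "conversion_rate": t["leads"] / t["visits"] if t["visits"] else 0.0,
        }
        for keyword, t in totals.items()
    }


# Made-up sample data for two entry pages.
pages = [
    {"url": "/pricing", "leads": 10, "keywords": [
        {"keyword": "widget prices", "impressions": 1000, "rank": 1},
        {"keyword": "blue widgets", "impressions": 1000, "rank": 3},
    ]},
    {"url": "/blog/widgets", "leads": 3, "keywords": [
        {"keyword": "blue widgets", "impressions": 2000, "rank": 2},
    ]},
]

result = estimate_keyword_performance(pages)
for keyword, stats in sorted(result.items()):
    print(keyword, stats)
```

Because leads are apportioned by traffic share, every keyword on a single page inherits that page's conversion rate; per-keyword rates only diverge when a keyword sends visitors to several pages that convert differently. Treat the output as a rough proxy rather than a measurement.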