Periscope Unveils New Comment Moderation System

Periscope is hitting back against spam and abuse by unveiling a new comment moderation system for its platform.

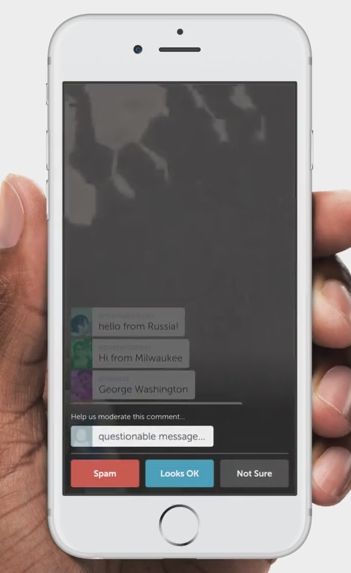

According to Periscope, the openness of its live and unfiltered app comes with an increased risk of spam and abuse. To keep its community safe, the company has been working on a moderation approach that is transparent, community-led and live. The result is a system that empowers viewers to report and vote on comments they consider to be spam or abuse.

The system enables viewers to report comments as spam or abuse during a broadcast. The viewer who reports a comment will no longer see messages from that commenter for the remainder of the broadcast. The system will also identify commonly reported phrases.

When a comment is reported, a few viewers are randomly selected to vote on whether they think the comment is spam or abuse. The result of the vote is then shown to the voters, and if the majority votes that the comment is spam or abuse, the commenter is notified that their ability to chat in the broadcast has been temporarily disabled. Repeat offenses result in chat being disabled for that commenter for the remainder of the broadcast.
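For readers who want to picture the flow, the report-and-vote process described above can be sketched roughly as follows. This is an illustration only, not Periscope's implementation: the class and method names, the jury size of five, and the choice to exclude the reporter and the commenter from the vote are all assumptions, since Periscope has not published those details.

```python
import random
from collections import defaultdict

# Assumption: the article only says "a few viewers"; 5 is a placeholder.
JURY_SIZE = 5


class BroadcastModerator:
    """Hypothetical model of one broadcast's moderation state."""

    def __init__(self, viewers, rng=None):
        self.viewers = set(viewers)
        self.rng = rng or random.Random()
        self.hidden = defaultdict(set)   # reporter -> commenters they no longer see
        self.strikes = defaultdict(int)  # commenter -> number of upheld reports
        self.opted_out = set()           # viewers who opted out of voting

    def report(self, reporter, commenter):
        """Hide the commenter from the reporter and pick a random jury."""
        self.hidden[reporter].add(commenter)
        # Assumption: the reporter and the commenter do not vote.
        eligible = sorted(self.viewers - {reporter, commenter} - self.opted_out)
        return self.rng.sample(eligible, min(JURY_SIZE, len(eligible)))

    def resolve(self, commenter, votes):
        """Apply the outcome of a vote; each vote is True for 'spam or abuse'."""
        if sum(votes) <= len(votes) / 2:  # no strict majority
            return "no_action"
        self.strikes[commenter] += 1
        if self.strikes[commenter] == 1:
            return "temporary_mute"
        return "muted_for_broadcast"      # repeat offense
```

In this sketch, a first upheld report returns `"temporary_mute"` and any later upheld report against the same commenter returns `"muted_for_broadcast"`, mirroring the escalation Periscope describes.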

Periscope notes that the new moderation system is lightweight and that the entire process should take just a matter of seconds. Broadcasters who don't want to participate can elect not to have their broadcasts moderated, and viewers can opt out of voting in their settings. Moreover, users can still use Periscope's other community tools, such as reporting ongoing harassment or abuse, blocking and removing people from their broadcasts, and restricting comments to people they know.
